Inpaint Anything is a powerful extension for the Stable Diffusion WebUI that allows you to manipulate and modify images in incredible ways.

With Inpaint Anything, you can seamlessly remove, replace, or edit specific objects within an image with precision.

Whether you’re working in the img2img tab with stable diffusion inpainting or exploring other features, this extension boosts your artistic expression.

So, without delay let’s first install the extension.

How to Install Inpaint Anything Extension

Before installation make sure you have installed AUTOMATIC1111.

If you have not installed it yet, first install it by reading our guide on Automatic1111.

Okay, if you have, then follow these simple steps to get started:

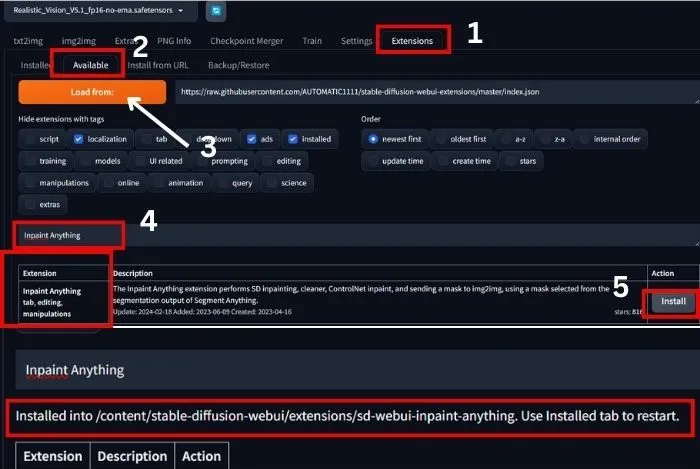

1. First, check whether the extension is available or not.

2. To check this, go to the ‘Extensions’ tab, click ‘Available’, and then click ‘Load from’.

3. Now search for ‘Inpaint Anything’. You will see the extension listed with an ‘Install’ button to its right.

4. Click on ‘Install’ and wait for processing until you see the notice for restart.

5. Now, go to the Installed tab and then restart the WebUI.

That’s it. If you do not find the extension in the ‘Available’ tab, follow the steps below to install it from the URL:

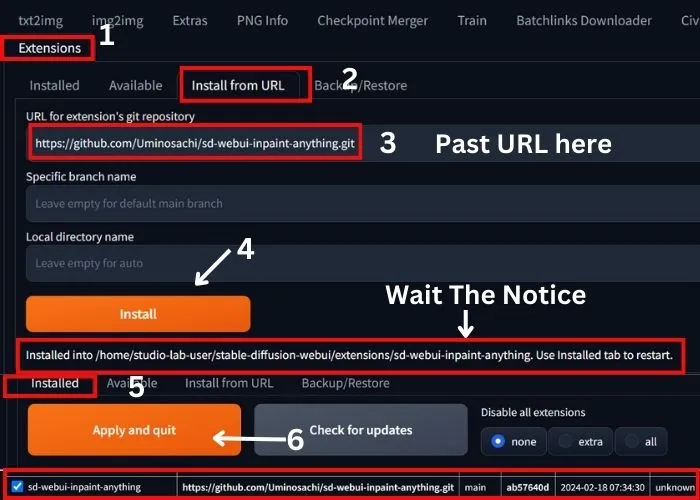

1. Head to the Extensions tab on AUTOMATIC1111’s Stable Diffusion Web UI.

2. Look for the Install from URL option.

3. Copy and paste `https://github.com/Uminosachi/sd-webui-inpaint-anything.git` into the URL field.

4. Hit the Install button to kick off the installation.

5. Once done, restart the Web UI to apply changes.
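If you prefer to install from a script instead of the UI, the same thing can be sketched in Python. This is only a minimal sketch: it assumes that git is on your PATH and that extensions live in the `extensions` folder inside your WebUI directory. The folder name `stable-diffusion-webui` and the helper names `build_clone_command` and `install_extension` are my own placeholders, not part of the extension.

```python
import subprocess
from pathlib import Path

REPO_URL = "https://github.com/Uminosachi/sd-webui-inpaint-anything.git"


def build_clone_command(webui_dir: str) -> list[str]:
    """Build the git command that clones the extension into <webui_dir>/extensions."""
    target = Path(webui_dir) / "extensions" / "sd-webui-inpaint-anything"
    return ["git", "clone", REPO_URL, str(target)]


def install_extension(webui_dir: str) -> None:
    """Run the clone; raises CalledProcessError if git reports a failure."""
    subprocess.run(build_clone_command(webui_dir), check=True)


if __name__ == "__main__":
    # Hypothetical WebUI location; change it to your own install folder.
    print(" ".join(build_clone_command("stable-diffusion-webui")))
```

Call `install_extension(...)` with your own WebUI path, then restart the Web UI just like in step 5.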

Note: Ensure you’re using version 1.3.0 or later of AUTOMATIC1111’s Stable Diffusion Web UI.

If you want to use memory-efficient xformers, you may read our guide on how to use Xformers to speed up the stable diffusion image generation process.

Okay, now let’s download the models for the extension and start our creative journey.

Download Model for Inpaint Anything

1. Navigate to the Inpaint Anything tab within the Web UI.

2. Find the Download model button next to the Segment Anything Model ID.

3. Click on “Download Model” and wait for a while to complete the download.

4. Once downloaded, you’ll find the model file in the models directory, and the WebUI will show a notice that the download is complete.

So, we have installed the extension and the models; now let’s move on to using it.

How to Use Inpaint Anything Extension

1. After opening your AUTOMATIC1111 WebUI, go to the ‘Inpaint Anything’ tab and upload the image that you want to edit to the ‘Input image’ canvas.

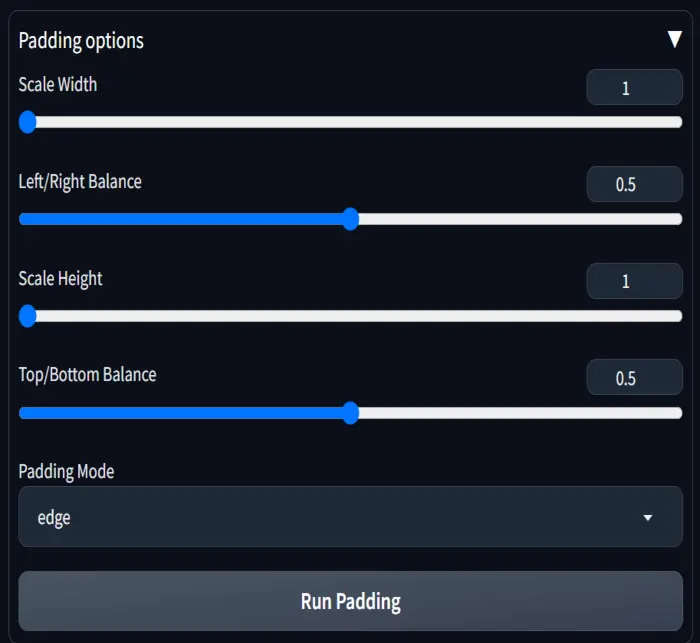

2. Now, just below your uploaded image, you will find the ‘Padding’ option.

Here you can scale the width and height of your canvas, and you can shift where the original image sits on the enlarged canvas with the right/left and top/bottom balance sliders.

For all the padding options, you can use the following values:

- Scale Width and height: 1

- Right/Left and Top/Bottom balance: 0.5
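To see what these numbers mean, here is a small sketch of the padding arithmetic. It is my own approximation, not the extension's code: I am assuming the scale multiplies the original size and the balance is the share of the extra space that goes to the left (or top) side. The function name `padded_canvas` is hypothetical.

```python
def padded_canvas(width, height, scale_w=1.0, scale_h=1.0,
                  lr_balance=0.5, tb_balance=0.5):
    """Estimate the padded canvas size and the padding on each side.

    Assumption: balance = fraction of the extra space placed on the left/top.
    """
    extra_w = max(int(width * scale_w) - width, 0)
    extra_h = max(int(height * scale_h) - height, 0)
    left = int(extra_w * lr_balance)
    top = int(extra_h * tb_balance)
    return {
        "size": (width + extra_w, height + extra_h),
        "left": left,
        "right": extra_w - left,
        "top": top,
        "bottom": extra_h - top,
    }


# With the values suggested above, nothing is added to the image.
print(padded_canvas(512, 512))
# Scaling the width by 1.5 with a centered balance pads both sides equally.
print(padded_canvas(512, 512, scale_w=1.5))
```

So a scale of 1 with a balance of 0.5 leaves the canvas as it is, which is why those values are a safe starting point.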

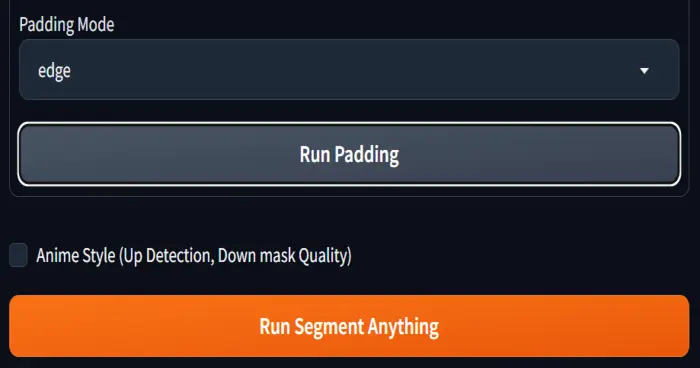

3. After fixing your padding, you will see the ‘Padding mode’ section just below the padding option.

There are 6 padding modes, but from my experience, all of these work similarly, so you can choose any of them.

4. Okay, after selecting the padding mode, click on ‘Run padding’.

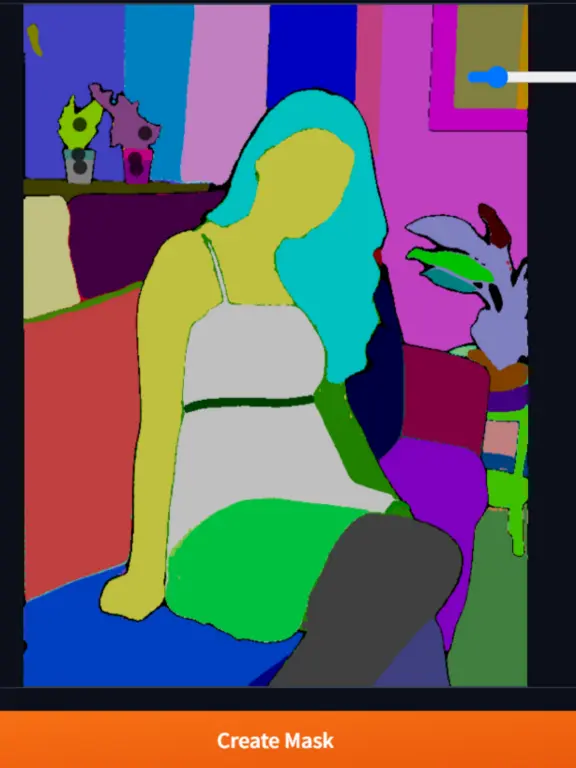

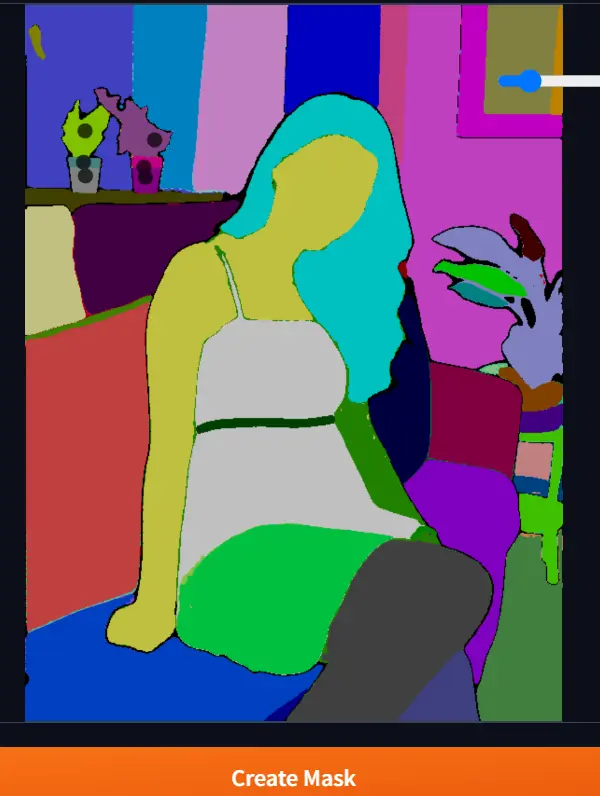

5. If your image is anime-style, check the ‘Anime Style’ box first. Then click “Run Segment Anything,” and you will get the detection map of the uploaded image in the right panel of the WebUI.

This detection map splits the image into segments, each highlighted in a different color, as you can see below.

If you need a closer look at the detection map, you can press the ‘S’ key for fullscreen mode and ‘R’ for zoom reset.

Now, we should mask the area for further work.

The cool thing about this extension is that you don’t need to mask the entire area you want to inpaint, unlike img2img inpainting, because the detection map already shows all parts in different colors.

6. That’s why, if I want to change the character’s clothing, I just dot the parts of the clothing like below. Notice that I don’t mark the belt.

After marking, click on ‘Create mask’.

You can see the masked area just below the panel.

Always go with ‘ignore black area’ instead of ‘invert mask’. If you choose ‘invert mask’, the model will mask only the area that you don’t select.
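The idea behind dotting and ‘Create mask’ can be sketched in a few lines. This is a simplified illustration, not the extension's code: I use a small grid of integer labels as a stand-in for the colored detection map, and the names `mask_from_clicks` and `invert` are hypothetical.

```python
def mask_from_clicks(segment_map, clicks):
    """Mask every pixel whose segment contains at least one clicked point.

    segment_map: rows of integer segment labels (stand-in for the colors).
    clicks: list of (x, y) points, like the dots you place on the map.
    """
    selected = {segment_map[y][x] for (x, y) in clicks}
    return [[1 if label in selected else 0 for label in row]
            for row in segment_map]


def invert(mask):
    """Flip the mask so that only the unselected area is masked."""
    return [[1 - value for value in row] for row in mask]


segments = [
    [0, 0, 1, 1],
    [0, 2, 2, 1],
    [3, 2, 2, 1],
]

# A single dot on segment 2 masks that whole segment.
mask = mask_from_clicks(segments, [(1, 1)])
for row in mask:
    print(row)
```

One dot is enough to pick up the whole segment, and inverting the mask selects everything except that segment, which is usually not what you want here.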

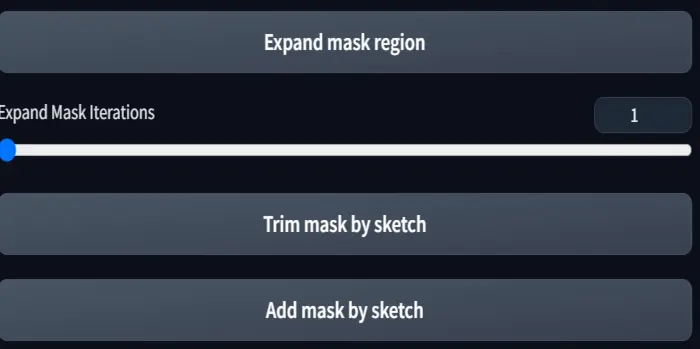

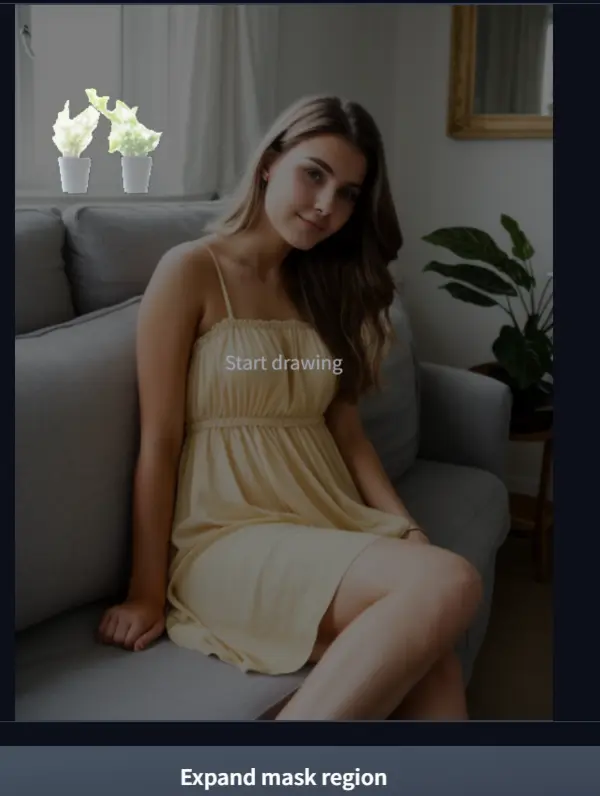

If you are not satisfied with the masked area, click on ‘Expand mask region’; it will increase the masked area. You can use this option until you get your desired mask.

To show the effect, I have used it two times, and below are the results:

In my case, I prefer the first one.
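Expanding a mask is what image-processing people call dilation. Here is a minimal pure-Python sketch of that idea. I am assuming each pass grows the mask by one pixel in every direction; the extension's real kernel size may differ, and `expand_mask` is a hypothetical name.

```python
def expand_mask(mask, iterations=1):
    """Grow a binary mask by one pixel per iteration (3x3 dilation)."""
    height, width = len(mask), len(mask[0])
    for _ in range(iterations):
        grown = [row[:] for row in mask]
        for y in range(height):
            for x in range(width):
                if not mask[y][x]:
                    continue
                # Switch on every neighbour of a masked pixel.
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < height and 0 <= nx < width:
                            grown[ny][nx] = 1
        mask = grown
    return mask


# A single masked pixel in the middle of a 5x5 grid.
start = [[0] * 5 for _ in range(5)]
start[2][2] = 1

once = expand_mask(start, iterations=1)
twice = expand_mask(start, iterations=2)
print(sum(map(sum, once)), sum(map(sum, twice)))
```

Each extra pass covers more of the surrounding pixels, so stop as soon as the mask covers the edges of the object you want to change.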

Here, you can also add to or trim the mask by clicking ‘Add mask by sketch’ and ‘Trim mask by sketch,’ respectively.

After completing all these steps, go back to the left panel, where you will find four tabs for further work just below the “Run Segment Anything” button.

Now, let’s discuss each of them step by step.

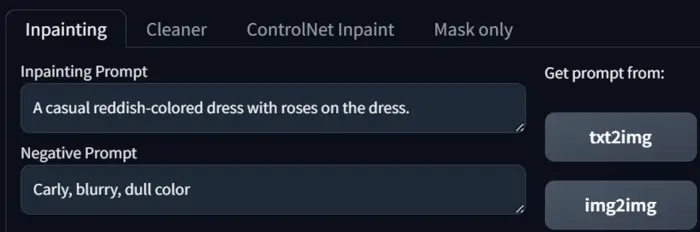

1. Inpainting Tab:

Here, you should first add the prompt and the negative prompt.

You can also copy the prompts you already have in the txt2img or img2img tab by clicking the ‘txt2img’ or ‘img2img’ button located next to the prompt and negative prompt boxes.

Since I want to change the clothing, I am using the following prompt and negative prompt.

Prompt: “A casual reddish-colored dress with roses on the dress.”

Negative prompt: Carly, blurry, dull color

Now, below the prompt box, you can find the advanced options.

Check the box ‘Mask area only’ if you want the inpainting to work only on the masked area.

Next is the sampler; here, you can choose the sampling method for the inpainting. In my case, I am selecting Euler.

Adjust the sampling steps, but remember, if you choose a higher value, it may take a longer time to generate the image.

So, in our opinion, the optimal range is from 20 to 35.

Next is CFG scale, which defines how strictly the model follows your prompt.

A higher value means your prompt is more important, while a lower value gives the model more creative freedom. The best range is from 6 to 9.
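If you are curious what the CFG scale does under the hood, classifier-free guidance mixes two noise predictions: one made without the prompt and one made with it. The sketch below shows that mix on plain lists of numbers. The function name `apply_cfg` and the toy values are mine, purely for illustration.

```python
def apply_cfg(uncond, cond, cfg_scale):
    """Classifier-free guidance: push the prediction toward the prompt.

    uncond: noise prediction without the prompt.
    cond: noise prediction with the prompt.
    """
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0]
cond = [1.0, 1.0]

print(apply_cfg(uncond, cond, 1.0))  # exactly the prompted prediction
print(apply_cfg(uncond, cond, 7.0))  # the prompt's effect is amplified
```

The larger the scale, the further the result is pushed in the direction of the prompt, which is why higher values follow the prompt more strictly.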

If you need a random composition, select -1 for the seed; otherwise, you can input any desired seed here.

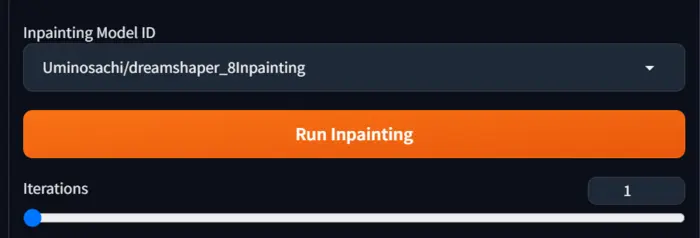

Below the advanced options, you can choose the ‘Inpainting Model ID’ and select the ‘iterations,’ which basically define how many inpainted images you want in the output.

After adjusting all of these settings, click the ‘Run Inpainting’ button.

You can see how the extension understands my prompt as well as the masking area and generates a quality image.

Since I didn’t mark the belt of the dress, it remains the same as in the uploaded image.

Now, I want to see how other Model IDs work; that’s why I selected the RealisticVision Model ID and opted for 3 ‘iterations’.

You can see that the results are almost the same but have different styles.

So, it is your choice which Inpainting Model ID to use. In our opinion, choose an ID that matches your checkpoint model.

Now, let’s move to the next tab…

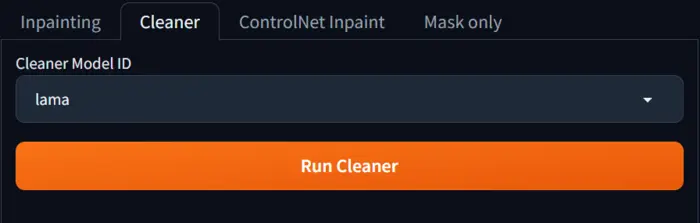

2. Cleaner tab:

Just right of the inpainting tab, the cleaner tab is available in the “Inpaint Anything” extension.

As its name suggests, it can be used to remove objects.

To do this, I dotted the tops in front of the window of my uploaded image and created the mask as you can see below.

Now select the ‘Cleaner model ID’. In my case, I ran three model IDs, and below are the results:

You can see that only the lama model ID gives a somewhat usable result.

So, our recommendation is that if you want to remove anything from your image, use img2img inpainting instead of the Inpaint Anything extension.

Okay, now let’s see how the extension works with ControlNet inpainting.

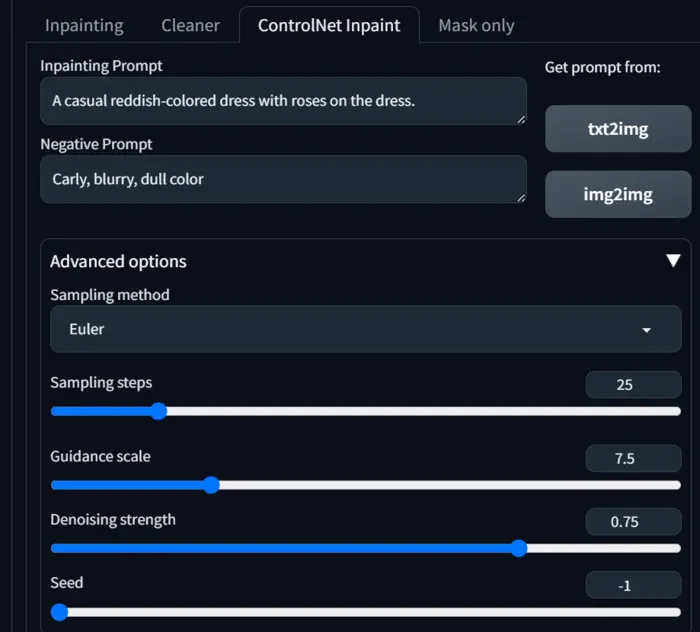

3. ControlNet Inpainting:

You can only see this tab if the ControlNet extension is installed in your WebUI.

The tab is just right of the ‘cleaner’ tab. When you click on it, you can see almost the same options as we have seen in the ‘Inpainting’ tab, except for Denoising strength.

So, adjust all of them as I stated earlier.

Denoising strength: It controls how far the algorithm is allowed to move away from the original image content while generating the inpainted area.

Use a lower value (around 0.5) where you want minimal deviation from the original image.

For more creative freedom and less strict adherence to the original content, use a higher denoising strength (0.75+).

So, experiment with different values based on your desired outcome. In my case, I am going with 0.75.
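One way to build intuition for denoising strength is to look at how many of your sampling steps actually get applied to the image. The sketch below follows the usual img2img-style rule, where the strength is the fraction of the steps that are run. I am assuming the ControlNet inpainting tab behaves the same way, and `steps_actually_run` is a hypothetical helper name.

```python
def steps_actually_run(sampling_steps, denoising_strength):
    """Estimate how many denoising steps are applied to the original image.

    Assumption: img2img-style behaviour, where strength is the fraction
    of the sampling steps that are run.
    """
    strength = min(max(denoising_strength, 0.0), 1.0)
    return min(int(sampling_steps * strength), sampling_steps)


for strength in (0.5, 0.75, 1.0):
    print(strength, steps_actually_run(20, strength))
```

More steps applied means more of the original content gets replaced, so a higher strength drifts further from the uploaded image.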

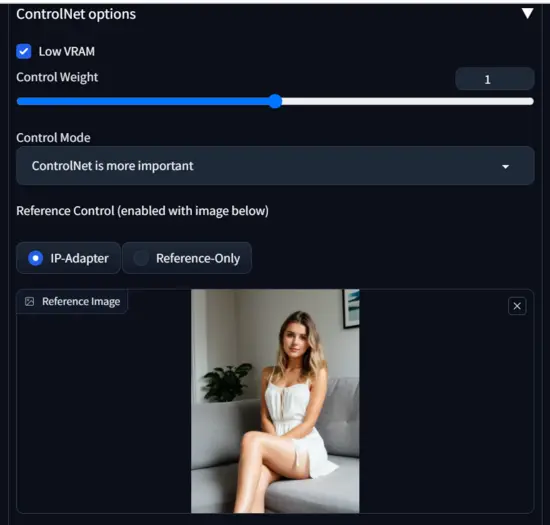

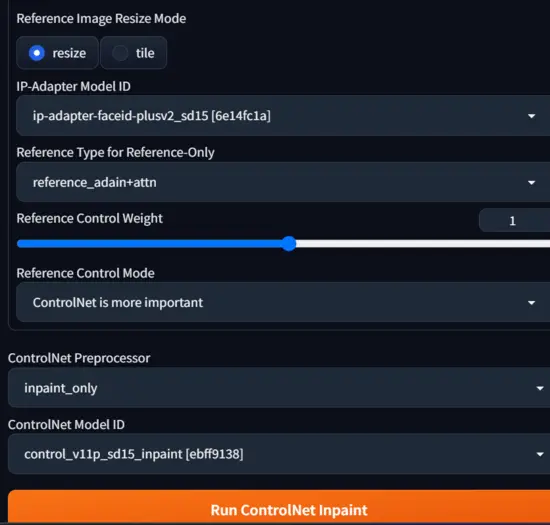

Now, below the Denoising strength, you’ll find the ControlNet options.

Low VRAM: check the box if your GPU has low memory; otherwise, you may uncheck it.

Control Weight: It determines how much control you give to the ControlNet.

If you want to replicate the composition from the reference image uploaded in the ‘Reference Image’ canvas, choose a value higher than 1.

Control Mode: Since you are using a reference image, select ‘ControlNet is more important’.

Reference Control: Currently, we have two options; for now, I am selecting IP-Adapter.

Reference Control Weight: It determines how much your reference image influences the output.

Opt for values higher than 1 if you truly want to replicate the reference image’s composition.

After adjusting all the above settings, select your preferred Reference Control, ControlNet processor, and Model ID, then click the ‘Run ControlNet Inpaint’ button.

Below is my result for the above reference image with Reference Control IP-Adapter.

You can see that even though I am using a reference image and selected ‘ControlNet is more important,’ ControlNet still just follows my prompt.

The reference image has no visible effect on the result.

So, to fix it, select ‘Reference Only’ from Reference Control and increase the value of Reference Control Weight.

In my case, I am generating with Reference Control Weight 1.5 and 1.7.

Now, you can see that ControlNet is following the reference image, but there is an error in the left leg.

You can easily fix this type of issue by using ADetailer.

So, experiment with various Reference Control weights and find your desired one.

For now, let’s move to the final tab.

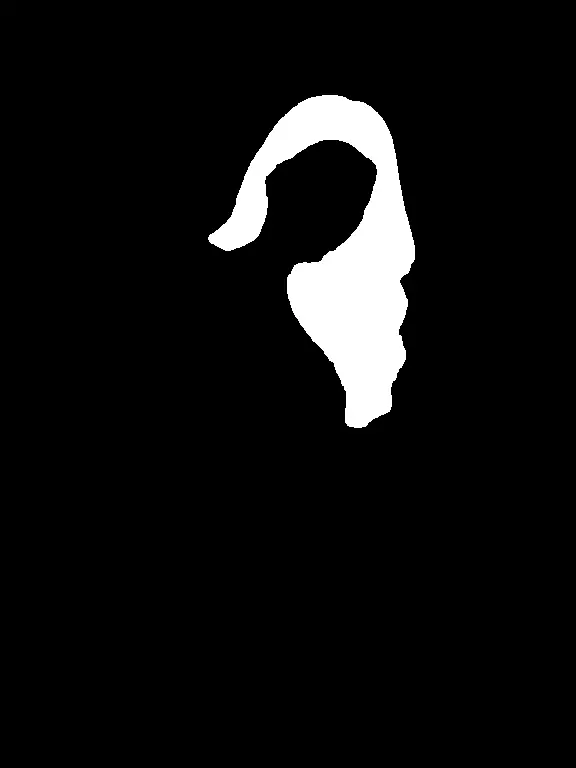

4. Mask Only:

If you regularly use inpainting in img2img, then you know that masking is a crucial part, and sometimes, if you go with a larger masking area, the results become annoying.

To avoid this, you can use the ‘Mask only’ tab in the Inpaint Anything extension.

To do this, dot the color you want to mask as I stated earlier, and send the mask to img2img inpaint.

Here you will get a mask image and an alpha channel image.
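If the term alpha channel is new to you, it is simply a fourth value per pixel that stores transparency. The sketch below turns a mask into an alpha channel on a tiny image. It is an illustration only: I am assuming the masked pixels stay opaque and everything else becomes transparent, which may not match the extension's output exactly, and `mask_to_alpha` is a hypothetical name.

```python
def mask_to_alpha(pixels, mask):
    """Attach the mask to an RGB image as an alpha channel.

    Assumption: masked pixels are opaque (255), the rest transparent (0).
    """
    return [
        [(r, g, b, 255 if m else 0) for (r, g, b), m in zip(pixel_row, mask_row)]
        for pixel_row, mask_row in zip(pixels, mask)
    ]


# A 1x2 image: a red pixel (masked) and a blue pixel (not masked).
image = [[(255, 0, 0), (0, 0, 255)]]
mask = [[1, 0]]

print(mask_to_alpha(image, mask))
```

The mask image and the alpha channel image carry the same selection; they only store it in different forms.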

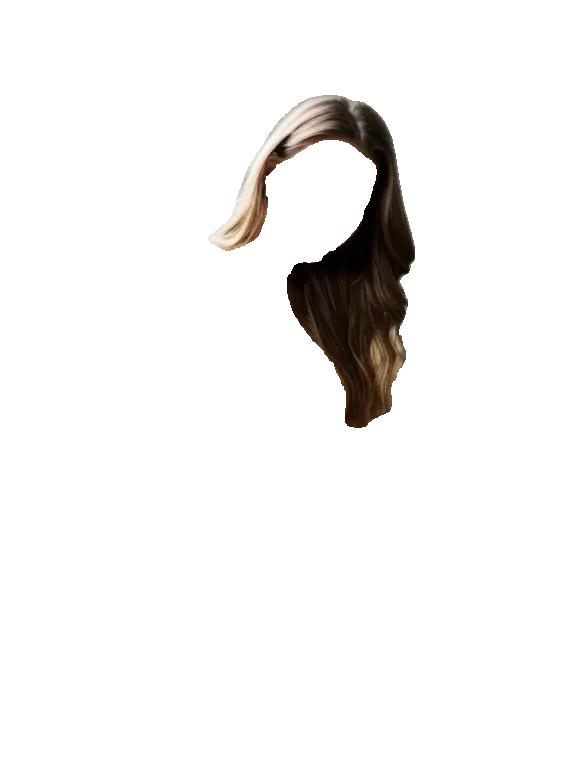

Now, give the prompt and negative prompt, adjust all the settings and parameters, and hit the generate button.

In my case, I select hair to mask and send it to the inpaint.

There, I paste the prompt “Pink hair” and hit the generate button.

You can see how great the hair looks.

If you follow all the steps I discussed in the post, you’ll definitely be able to use Inpaint Anything like a pro.

That’s all for today about the Inpaint Anything extension. If I missed something, please let me know by commenting below.

Reference: GitHub Repository.

Hi there! I’m Zaro, the passionate mind behind aienthusiastic.com. With a background in Electronics Science, I’ve had the privilege of delving deep into AI and ML. And this blog is my platform to share my enthusiasm with you.